For those who are still unsure, my last post ‘Microsoft’s Polar Data Centres and World Wireless System’ was posted on 1st April with good reason: it was an April Fool’s posting. I am glad to say Microsoft took it in good humor so I am still an MVP, for now.

The post is the final part in a series of three postings about my experience of moving to the cloud. In part one I outlined my problem. Essentially I was carrying around too much data (10 years of e-mails in a PST, 35G of historical data, much music) on my laptop and it was a data-loss disaster waiting to happen. Other frustrations included:

- time being eaten up in doing regular backups and maintenance

- having to haul my personal laptop everywhere to read my personal e-mail

I signed up to Office 365 with the intention of using it to solve some of these issues.

Part two talked about migrating my 12G PST file across to the Office 365 Exchange server. This was an excellent decision and, four months later, I have no regrets. I still have the niggle of the ‘on behalf of’ for mail sent out on Gmail but this pales next to the benefits. Mailbox usage is now at 7.4G, meaning I have gobbled up about 0.8G in four months, which means I have about seven years to go before I reach my quota.
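A rough sketch of that estimate follows. The 7.4G usage and the 0.8G of growth over four months are the figures quoted above; the 25G mailbox quota is an assumption (the post does not state it), so swap in the limit for your own plan. It also assumes growth stays linear.

```python
def years_until_quota(used_gb, growth_gb, growth_months, quota_gb):
    """Project the years left before a mailbox reaches its quota,
    assuming it keeps growing at the observed (linear) rate."""
    if growth_gb <= 0 or growth_months <= 0:
        raise ValueError("growth and period must be positive")
    monthly_growth = growth_gb / growth_months
    months_left = (quota_gb - used_gb) / monthly_growth
    return months_left / 12


# 7.4G used and 0.8G of growth in 4 months come from the post;
# the 25G quota is an assumed figure, not one the post states.
estimate = years_until_quota(used_gb=7.4, growth_gb=0.8, growth_months=4, quota_gb=25)
print(f"About {estimate:.1f} years to go")
```

With those inputs the projection lands a little over seven years, which matches the estimate above.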

In this part I will talk about moving the 35G of data (plus music).

Going All-In With a Tablet

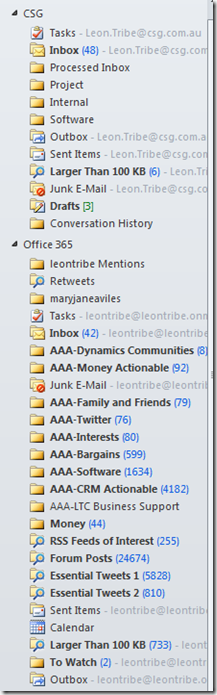

I live in Outlook. It is my one-stop shop for e-mails, RSS feeds and tweets. Everything is in the one spot and, with Outlook rules, filtering what is important and what is ‘nice to read’ is relatively simple. I often see tablet devices, such as Android tablets and the iPad but, without Outlook, their use for me is limited. Sure, you can read e-mail and then, with another app, you can check your calendar but the ‘dashboard’ of Outlook gives it to me in one hit. For me, it just works. I love the form-factor of these devices but the OS kills me.

To this end, I decided to take the plunge on a PC tablet aka slate. This is a device which looks like an iPad but runs Windows. CSG, where I work, has an annual gadget allowance of $500 so, as long as I could find a tablet for $500 or less, I could test the waters and not be out of pocket.

This gave me the opportunity to test out a site I have known about for a while, AliExpress. This is a retail site for the slightly better-known Alibaba.com. Alibaba is a front-end for manufacturing factories in places like China. If you want something, and brand is not so important, you can buy it on Alibaba; from bookmarks and necklaces to high-end electronics, it is there. In the case of Alibaba, you deal directly with the manufacturer and, generally, you will need to buy multiple units. AliExpress deals mostly in single unit purchases and offers an escrow service as standard. In other words, when you pay, AliExpress holds the money and, when you receive the item and confirm it is what you paid for, they release it to the manufacturer. You get the goods at factory prices and there is no risk; everyone wins.

In the end, for US$472 I got:

- 10 inch touch-screen tablet running Windows 8 Consumer Preview edition

- Intel N570 dual core processor

- Wi-fi, bluetooth

- 2G RAM and 2*32G solid state drives and a micro-SD expansion slot

The memory was a bit low but, of all the machines on Alibaba, I could get either high memory or a multicore processor, not both. My current laptop had 4G RAM but a single core processor and it drove me nuts so I was happy to try this configuration instead.

The transaction went without a hitch and I now use the tablet as my personal laptop. Generally I connect a USB mouse to it as my fingers are too large to interact with the screen on the higher resolutions, but this is fine and there are plenty of mini-mice on the market. In hindsight I should have chosen 3G capability instead of Bluetooth (it’s one or the other) but I can still tether to a device via USB so it is no big deal, and Wi-Fi hotspots are becoming more and more commonplace.

So what does this have to do with moving to the cloud? Well, a few things:

- This is a no-brand machine direct from a factory in Guangzhou, China. Quality is not guaranteed.

- It is running a beta operating system (Windows 8 Consumer Preview)

- It has only got 64G of space

These factors mean I have a strong motivation NOT to store any data locally. For what it’s worth, after a couple of weeks, the hardware has had no issues and the OS has only blue-screened once. With the page file and search index files moved across to the second drive, and Office 2010 installed, I still had about 15G spare on the primary drive, so lots of room for programs.

Using the Right Cloud Solution For The Right Data

Another recent purchase was a Windows Phone, a Nokia Lumia 800. As well as the clear parallels in the interface between Windows Phone and Windows 8 Consumer Preview, another clear message is that SkyDrive is going to be a big part of the plan. If you are not familiar with SkyDrive, Microsoft offers 25G of storage space, in the cloud, for free. The ability to easily access SkyDrive is baked into Windows 8 and Windows Phone with the clear intention that this become the central store for all devices.

There are a few issues to consider with SkyDrive:

- There is no local cache of the data, so if you have no internet, you have no data

- File sizes are limited to 100M (this may be increased to 300M in the future)

- It is really only designed to handle certain file types i.e. pictures, videos and office files

The last two issues can be overcome by using Gladinet. Gladinet is a front-end to many different cloud storage services, including SkyDrive. It will auto-chunk larger files to comply with the 100M limit, i.e. break them up for storage but still allow them to be reconstructed later. It will also allow any kind of file to be uploaded without the auto-conversion that SkyDrive can apply to things like picture files.
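To make the chunking idea concrete, here is a minimal sketch of splitting a file into parts under a size limit and stitching them back together. This is not Gladinet's actual implementation, which the post does not describe; the function names and the `.partNNNN` naming are made up for illustration. The demo uses a tiny chunk size so it runs quickly.

```python
import os
import tempfile

CHUNK_SIZE = 100 * 1024 * 1024  # the 100M per-file limit mentioned in the post


def split_file(path, chunk_size=CHUNK_SIZE):
    """Write path out as numbered parts of at most chunk_size bytes each.
    Returns the part paths in order. (Hypothetical naming scheme.)"""
    parts = []
    with open(path, "rb") as src:
        index = 0
        while True:
            data = src.read(chunk_size)  # holds one whole chunk in memory
            if not data:
                break
            part_path = f"{path}.part{index:04d}"
            with open(part_path, "wb") as dst:
                dst.write(data)
            parts.append(part_path)
            index += 1
    return parts


def join_files(parts, out_path):
    """Rebuild the original file by concatenating the parts in order."""
    with open(out_path, "wb") as dst:
        for part in parts:
            with open(part, "rb") as src:
                dst.write(src.read())


# Demo: a 2500-byte file with a 1000-byte limit needs three parts.
with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "video.bin")
    with open(original, "wb") as f:
        f.write(os.urandom(2500))
    parts = split_file(original, chunk_size=1000)
    restored = os.path.join(tmp, "restored.bin")
    join_files(parts, restored)
    with open(original, "rb") as a, open(restored, "rb") as b:
        round_trip_ok = a.read() == b.read()
    part_count = len(parts)

print(part_count, round_trip_ok)
```

A real tool would also need to record the part order and a checksum somewhere so the file can be verified after reassembly; that bookkeeping is left out here.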

I got the Professional version through a scheme where you sign up for some online service in exchange for getting the software. In my case, I signed up for a trial online brain-training program and got the software for free. The Professional version made uploading my files much easier via the backup function.

After a couple of weeks, I had filled the 25G of space.

Via Gladinet, I can access this as a drive on my laptop (I have not installed Gladinet on the slate yet, but can still access the SkyDrive easily) and via WinDirStat can browse this drive to see where all the space is being taken up (although, as you can see above, the online interface to SkyDrive is also quite friendly in this regard).
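If you do not have WinDirStat to hand, a similar (much cruder) per-folder breakdown can be sketched in a few lines. This assumes the cloud storage is mounted as an ordinary drive or folder, as Gladinet does; the function names are my own.

```python
import os


def folder_sizes(root):
    """Return {name: total bytes} for each top-level file or folder in root."""
    totals = {}
    for entry in os.scandir(root):
        if entry.is_file(follow_symlinks=False):
            totals[entry.name] = entry.stat(follow_symlinks=False).st_size
        elif entry.is_dir(follow_symlinks=False):
            size = 0
            for dirpath, _dirnames, filenames in os.walk(entry.path):
                for name in filenames:
                    try:
                        size += os.path.getsize(os.path.join(dirpath, name))
                    except OSError:
                        pass  # skip files that vanish or cannot be read
            totals[entry.name] = size
    return totals


def report(root):
    """Print the top-level entries of root, largest first, in gigabytes."""
    totals = folder_sizes(root)
    for name, size in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{size / 1024**3:8.2f}G  {name}")
```

Calling `report()` with the path of the mounted drive (whatever drive letter or folder it was mapped to) lists the biggest folders first.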

So What About Data You Need When Offline?

One aspect a lot of cloud-zealots fail to cover is the issue of being offline. While SkyDrive is an excellent repository for archived data and things you need when online, there is some data you need offline. For example, I have a list of blog topics in a Word document. If I am on a flight with a few hours to spare, it is likely I will not have an internet connection but could use the time to write a blog article. This is where Mesh comes in. Mesh is part of Windows Live Essentials. Mesh gives you 5G of additional storage which can be accessed in the cloud (think of it as a mini-SkyDrive) but it also syncs with a local folder on a PC or laptop.

I imagine SkyDrive and Mesh will eventually merge but, at this stage, this is not the case.

So, all up, this gives me 30G of storage for free, all in the cloud, with the option of 5G of it being synced to a local folder. This is 5G short of my 35G of data, which means I can use a third-party product like Dropbox or ADrive, review whether I really need all my data, or wait until Microsoft offers additional SkyDrive storage for a price. As you can see above, almost 5G of my SkyDrive is taken up by ‘Multimedia’. These are photos and videos of the kids. There are plenty of sites out there which house this for free so I am not overly concerned.
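The storage budget is simple enough to tally in a couple of lines, using only the figures from this post (25G SkyDrive, 5G Mesh, 35G of data):

```python
# Free allowances quoted in the post, in gigabytes.
free_storage_gb = {"SkyDrive": 25, "Mesh": 5}
data_gb = 35  # historical data to be moved (music is handled separately)

total_free = sum(free_storage_gb.values())
shortfall = max(0, data_gb - total_free)
print(f"Free storage: {total_free}G, shortfall: {shortfall}G")
```

Adding another provider's free allowance to the dictionary shows straight away whether the shortfall is covered.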

What About The Music?

For my music collection I am using Google Music, now Google Play. This lets me load up to 20,000 songs for free, all of which can be played via a browser. Although there is only an app for Android, I can happily play my music via Internet Explorer on my Windows Phone and set the web page as a tile, turning it into a pseudo-app. Hopefully, Google will extend Google Play to cover home movies, meaning I will have a one-stop shop for my music and movies.

For those of you outside of the USA, when you initially sign up for Google Play, Google checks whether you are connecting from a US machine or not. If not, you are not allowed to use the service. Using Tor servers and web proxies does not fool it. Therefore, you may need a friend in the USA to help you with the initial log in. Once you set it up that first time, Google never checks your location again.

Conclusions

While it is still early days, I could not be happier with my decision to ‘go cloud’. E-mail, past and present, is available anywhere. My large backlog of data is only a browser away and the data I need offline is also auto-synced to the cloud and to all my other devices (personal slate, work PC, wife’s laptop etc.). Any one of my devices could catch fire tomorrow and I would not care. Also, moving to a new device is simply a case of telling it about my Office 365 account, telling it my Live ID (for SkyDrive) and setting up Mesh. All the associated file movements happen automatically.

If you are considering doing something similar, the barriers are quite low from a cost perspective (all of this is costing me just US$6 per month for e-mail and nothing for data/music) and there is nothing stopping you having your data in both SkyDrive and on a local store to see how it goes. My recommendation is to give it a try and, like when you buy insurance, my prediction is you will feel a zen-like relief that all the worries are now someone else’s problem.

![clip_image002[6] clip_image002[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhIbmjsERe5xSRekA0HIVOXlPbX9rBcVtPF1piRTbCq867UltxXl_8bpcFtjX0AYIErWpumae5VQUuvALCQju5-nuavhvfp0hcXH2vrxHXjOhZWhrdebNv5bTfGe0fZQLHCjufu2auvwtGt/?imgmax=800)

![clip_image003[6] clip_image003[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhjyhaSRJQRs9oeiemDTFY57S4FAN8HltQ82goXcZlfHCyWvS_2zctfNGfZHwBl6bL10aFlysEcT_Xnpol-qqGAi1pOTpgp8v8RWHLr6mt6LykFCptXHQldTJH0Bul9HmVbZ6hZotwDbK7D/?imgmax=800)